Refactoring 20-year-old code...

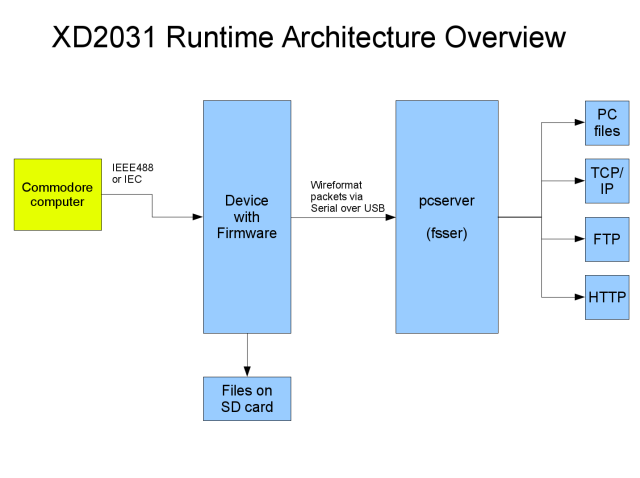

When I was working on my self-built 6502 operating system, I had a file system server on the PC, connected to the 6502 via a serial line (RS232). It used a generic, packet-based protocol on the serial "wire", so I decided to reuse both the protocol and the server for an AVR-based implementation of a Commodore disk emulator. The AVR would behave as a disk drive: the Commodore would talk to it via IEEE488 or the CBM serial IEC protocol, and the AVR would use the wire protocol to talk to the PC and serve files from there. In the beginning it looked like a good idea. But it took much more time than expected. Read on for the full story.

The motivation to build an AVR-based disk drive for my Commodore computers is simply that I like to use the original machines, but do not want to use the original mechanical drives for fear of wear and breakage. This had also been my usual mode of operation for a long time: one of the first things I did with my C64 was to equip it with an IEEE488 interface, so I didn't really need any fastloader, and I used first my Atari ST and later the PC with a printer interface as disk drives. However, the printer interface broke, so I had to look for a replacement, and an AVR-based solution looked "hip" :-)

First I looked at existing solutions. All but one of them were targeted at controlling a disk drive from the PC, not the other way round, using the PC as a disk drive. And the one I found (sd2iec) was not flexible enough for what I had in mind. That led to writing a completely new AVR firmware, while still basically using the original server software.

This server code, however, was showing its age. It was developed at a time when malloc() calls were expensive, so many lists were simple fixed-size tables. Originally it was only possible to map server filesystem directories to a "disk drive", and a file was a file. No translation took place. Over time, support was added for handling disk images as pseudo-drives, as well as TCP, FTP and HTTP connections. But it still didn't fulfill modern requirements, such as translating "P00" files into their native names, or using D64 files from the net.

So I decided to do a big refactoring. Almost a year ago I branched the code and started working on the new structure. This included making many of the table-based structures malloc-based, but the main feature was the ability to recursively stack providers and handlers. Providers are modules that provide endpoints; an endpoint is basically a directory that can be used as a drive from the Commodore machine. The "fs" provider, for example, lets you assign directories to disk drive numbers. A handler matches file names and translates them. For example, so-called "P00" files have abbreviated, PC-DOS compatible file names and store the original Commodore file name at the beginning of the file. You would expect this original file name to appear in a directory listing, and to be used in file name matching.
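To illustrate what a P00 handler has to do, here is a minimal Python sketch (not the server's actual C code). It assumes the commonly documented PC64 layout: an 8-byte magic `C64File\0`, a 16-byte zero-padded file name, one zero byte and one record-size byte, 26 bytes in total. The function names are made up for this example, and the name is returned as raw bytes without any PETSCII conversion.

```python
# Assumed P00 ("PC64") header layout, 26 bytes in total:
#   bytes 0..7    magic "C64File" followed by a zero byte
#   bytes 8..23   original Commodore file name, padded with zero bytes
#   byte  24      zero byte
#   byte  25      record size (only meaningful for REL files)
P00_MAGIC = b"C64File\x00"
P00_HEADER_LEN = 26


def p00_original_name(data: bytes):
    """Return the original file name as raw bytes, or None if this is no P00 file."""
    if len(data) < P00_HEADER_LEN or data[:8] != P00_MAGIC:
        return None
    # Cut the 16-byte name field at the first padding byte.
    return data[8:24].split(b"\x00", 1)[0]


def p00_payload(data: bytes) -> bytes:
    """Return the file contents that follow the (assumed) 26-byte header."""
    return data[P00_HEADER_LEN:]
```

A handler built on this would show the result of `p00_original_name()` in directory listings and match incoming file names against it, instead of using the abbreviated PC-DOS name.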

That looks simple, but the little word "recursively" spoils the story. It means that you can have a D82 file that contains a P00 file that contains a D64 file that itself has another R00 file in it. And you should be able to simply address this with a single file path like "foo.d82/orig_img_p00_name.d64/orig_inner_p00_name".

So I had to do a lot of rebuilding. To achieve this I first removed all but the filesystem providers, added a P00 handler and worked on the recursive algorithm. The main difficulty here is that you can't just give a path to the filesystem provider: at each directory level you need to check whether the file exists and whether it needs a specific handler. If it does, you have to wrap it into the handler and continue the directory drill-down on that wrapper. After this had been done, I was able to add first the disk image provider, and then the other providers, back into the code.
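The drill-down can be sketched roughly as follows. This is a toy Python model, not the real server code: `Entry`, `p00_handler`, `image_handler`, `wrap` and `resolve` are hypothetical names, and the `inner_name` and `packed` fields merely stand in for actually parsing a P00 header or a disk image.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Entry:
    name: str
    children: Optional[List["Entry"]] = None  # set only for directories
    inner_name: Optional[str] = None          # toy stand-in for the name in a P00 header
    packed: Optional[List["Entry"]] = None    # toy stand-in for the contents of an image


Handler = Callable[[Entry], Optional[Entry]]


def p00_handler(e: Entry) -> Optional[Entry]:
    """Expose a P00 file under its original name; None if it does not apply."""
    if e.children is None and e.name.lower().endswith(".p00") and e.inner_name:
        return Entry(name=e.inner_name, packed=e.packed)
    return None


def image_handler(e: Entry) -> Optional[Entry]:
    """Expose a (toy) disk image file as a directory; None if it does not apply."""
    if (e.children is None and e.packed is not None
            and e.name.lower().endswith((".d64", ".d82"))):
        return Entry(name=e.name, children=e.packed)
    return None


def wrap(entry: Entry, handlers: List[Handler]) -> Entry:
    """Stack handlers on an entry until none of them applies any more."""
    applied = True
    while applied:
        applied = False
        for handler in handlers:
            wrapped = handler(entry)
            if wrapped is not None:
                entry, applied = wrapped, True
                break
    return entry


def resolve(root: Entry, path: str, handlers: List[Handler]) -> Optional[Entry]:
    """Walk the path one level at a time, wrapping each candidate before matching."""
    current = root
    for part in (p for p in path.split("/") if p):
        if current.children is None:
            return None  # not a directory, cannot descend further
        for child in current.children:
            candidate = wrap(child, handlers)
            if candidate.name == part:
                current = candidate
                break
        else:
            return None  # no entry on this level matched
    return current
```

With a toy tree in which `foo.d82` contains `IMG.P00` (inner name `orig_img.d64`), which in turn contains `INNER.P00` (inner name `orig_inner`), calling `resolve(root, "foo.d82/orig_img.d64/orig_inner", [p00_handler, image_handler])` walks all three levels, because every level is wrapped before its name is compared.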

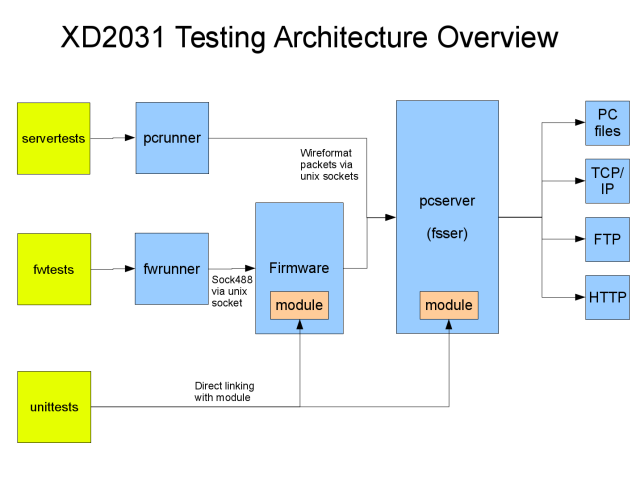

In this process I found it very tedious to test all the different combinations with the real hardware connected. So I did something I had planned for a long time but never got around to: I created a PC-based firmware build. It communicates with a test script runner as well as with the server software via Unix sockets. So I was able to drive the firmware with test scripts while observing the firmware and server behaviour, even without real hardware! And to make things even better, I devised a VICE emulator patch that records test scripts from programs running in it. That is, you run a small BASIC program in the emulator with full (IEEE488) disk drive emulation, and get a test script for the firmware!
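The general idea of a script runner driving a firmware build over a socket can be sketched like this. Everything here is an assumption made for illustration: the one-byte length framing, the `OK:` replies and the function names are invented, and the real XD2031 test script format and wire protocol are different. `fake_firmware` only stands in for the PC-based firmware build.

```python
import socket
import threading


def recv_exact(conn: socket.socket, n: int) -> bytes:
    """Read exactly n bytes from a stream socket."""
    buf = b""
    while len(buf) < n:
        chunk = conn.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf += chunk
    return buf


def send_packet(conn: socket.socket, payload: bytes) -> None:
    """Send one packet: a length byte followed by the payload (invented framing)."""
    conn.sendall(bytes([len(payload)]) + payload)


def recv_packet(conn: socket.socket) -> bytes:
    """Receive one packet written by send_packet()."""
    (length,) = recv_exact(conn, 1)
    return recv_exact(conn, length)


def fake_firmware(conn: socket.socket) -> None:
    """Stand-in for the firmware: answers every packet with b'OK:' + packet."""
    with conn:
        try:
            while True:
                send_packet(conn, b"OK:" + recv_packet(conn))
        except ConnectionError:
            pass  # the runner closed its end, so we are done


def run_script(conn: socket.socket, script):
    """Play (send, expect) pairs and return a list of mismatches."""
    failures = []
    for send, expect in script:
        send_packet(conn, send)
        reply = recv_packet(conn)
        if reply != expect:
            failures.append((send, expect, reply))
    return failures


if __name__ == "__main__":
    runner_end, firmware_end = socket.socketpair()
    threading.Thread(target=fake_firmware, args=(firmware_end,), daemon=True).start()
    print(run_script(runner_end, [(b"LOAD", b"OK:LOAD")]))
    runner_end.close()
```

Because the runner only sees a socket, the same script can be replayed after every change, which is what makes this kind of setup useful for regression testing.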

These test scripts helped a lot in testing and ironing out bugs in the code, even though they don't (yet) cover all of it. Even more importantly, they make the tests repeatable: after each change you can run the full test suite and check what got broken, and especially by which change!

So what did I learn? Refactoring old code can be a tedious project. Luckily even the old code was somewhat modular, so some changes could be tackled piece by piece (and are still not completely finished). Other changes were more disruptive. I managed to handle those too, again because the code was already somewhat modular: tackling one provider after the other is easier than having to do all of them at the same time.

Because this all happened in my spare time, it still took almost a year. But the result is worth it: a modular, fully testable file system server with stackable file type handlers that allow, for example, handling D64 files in P00 files (and later in zip files).

André

P.S.: you can find the code at https://github.com/fachat/XD2031.